Regression

Load library packages

```r
library(dplyr)
library(ggplot2)
library(moderndive)
library(broom)
library(skimr)
```

Data

Data are from the {moderndive} package, the companion package to ModernDive (Kim, Ismay, and Kuhn 2021), and the {dplyr} package (Wickham et al. 2023). Select four columns of the `evals` data set:

```r
evals_ch5 <- evals %>%
  select(ID, score, bty_avg, age)
```

```r
evals
```

| ID `<int>` | prof_ID `<int>` | score `<dbl>` | age `<int>` | bty_avg `<dbl>` | gender `<fct>` | ethnicity `<fct>` | language `<fct>` |
|---|---|---|---|---|---|---|---|
| 1 | 1 | 4.7 | 36 | 5.000 | female | minority | english |
| 2 | 1 | 4.1 | 36 | 5.000 | female | minority | english |
| 3 | 1 | 3.9 | 36 | 5.000 | female | minority | english |
| 4 | 1 | 4.8 | 36 | 5.000 | female | minority | english |
| 5 | 2 | 4.6 | 59 | 3.000 | male | not minority | english |
| 6 | 2 | 4.3 | 59 | 3.000 | male | not minority | english |
| 7 | 2 | 2.8 | 59 | 3.000 | male | not minority | english |
| 8 | 3 | 4.1 | 51 | 3.333 | male | not minority | english |
| 9 | 3 | 3.4 | 51 | 3.333 | male | not minority | english |
| 10 | 4 | 4.5 | 40 | 3.167 | female | not minority | english |

```r
evals_ch5
```

| ID `<int>` | score `<dbl>` | bty_avg `<dbl>` | age `<int>` |
|---|---|---|---|
| 1 | 4.7 | 5.000 | 36 |
| 2 | 4.1 | 5.000 | 36 |
| 3 | 3.9 | 5.000 | 36 |
| 4 | 4.8 | 5.000 | 36 |
| 5 | 4.6 | 3.000 | 59 |
| 6 | 4.3 | 3.000 | 59 |
| 7 | 2.8 | 3.000 | 59 |
| 8 | 4.1 | 3.333 | 51 |
| 9 | 3.4 | 3.333 | 51 |
| 10 | 4.5 | 3.167 | 40 |

```r
evals_ch5 %>%
  summary()
```

```
       ID            score          bty_avg           age
 Min.   :  1.0   Min.   :2.300   Min.   :1.667   Min.   :29.00
 1st Qu.:116.5   1st Qu.:3.800   1st Qu.:3.167   1st Qu.:42.00
 Median :232.0   Median :4.300   Median :4.333   Median :48.00
 Mean   :232.0   Mean   :4.175   Mean   :4.418   Mean   :48.37
 3rd Qu.:347.5   3rd Qu.:4.600   3rd Qu.:5.500   3rd Qu.:57.00
 Max.   :463.0   Max.   :5.000   Max.   :8.167   Max.   :73.00
```

```r
skimr::skim(evals_ch5)
```

| Name | evals_ch5 |
|---|---|
| Number of rows | 463 |
| Number of columns | 4 |
| Column type frequency: | |
| numeric | 4 |
| Group variables | None |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |
|---|---|---|---|---|---|---|---|---|---|---|
| ID | 0 | 1 | 232.00 | 133.80 | 1.00 | 116.50 | 232.00 | 347.5 | 463.00 | ▇▇▇▇▇ |
| score | 0 | 1 | 4.17 | 0.54 | 2.30 | 3.80 | 4.30 | 4.6 | 5.00 | ▁▁▅▇▇ |
| bty_avg | 0 | 1 | 4.42 | 1.53 | 1.67 | 3.17 | 4.33 | 5.5 | 8.17 | ▃▇▇▃▂ |
| age | 0 | 1 | 48.37 | 9.80 | 29.00 | 42.00 | 48.00 | 57.0 | 73.00 | ▅▆▇▆▁ |

Correlation

Strong correlation

Use the `cor()` function to compute a correlation coefficient. For example, there is a strong correlation between `starwars$height` and `starwars$mass` once the extreme outlier (`mass >= 500`) is filtered out:

```r
my_cor_df <- starwars %>%
  filter(mass < 500) %>%
  summarise(my_cor = cor(height, mass))

my_cor_df
```

| my_cor `<dbl>` |
|---|
| 0.7612612 |

`cor()` returns a Pearson correlation coefficient of 0.7612612, which indicates a strong positive linear relationship between height and mass: taller characters tend to be heavier.
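By default, `cor()` computes the Pearson coefficient, which is the covariance of the two variables divided by the product of their standard deviations. A minimal sketch that rebuilds it from that definition; the helper `pearson_by_hand()` is hypothetical, and the extra `!is.na(height)` filter is an assumption added so that `cov()` and `sd()` see exactly the same rows.

```r
library(dplyr)

# Hypothetical helper: Pearson's r = cov(x, y) / (sd(x) * sd(y))
pearson_by_hand <- function(x, y) {
  cov(x, y) / (sd(x) * sd(y))
}

# Same outlier filter as above; filter() also drops rows where mass is NA,
# and we additionally drop any rows with a missing height
sw <- starwars %>%
  filter(mass < 500, !is.na(height))

r_builtin <- cor(sw$height, sw$mass)
r_manual <- pearson_by_hand(sw$height, sw$mass)

# The two values should agree up to floating-point error
c(builtin = r_builtin, manual = r_manual)
```

The `n - 1` denominators in `cov()` and `sd()` cancel, so the result does not depend on which variance convention is used.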

Weak correlation

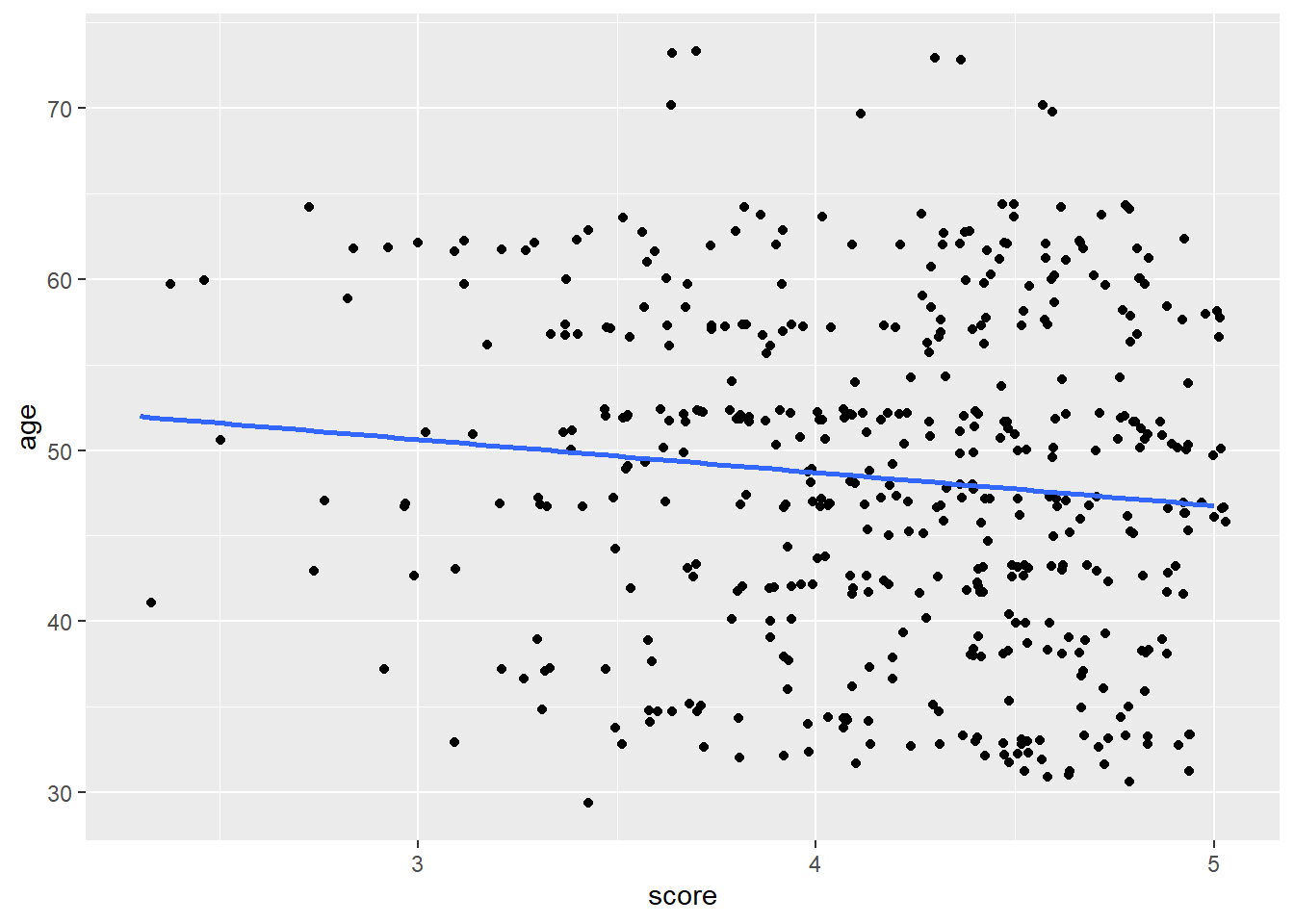

By contrast, see here a regression line that visualizes the weak correlation between evals_ch5$score and evals_ch5$age

evals_ch5 %>%

ggplot(aes(score, age)) +

geom_jitter() +

geom_smooth(method = lm, formula = y ~ x, se = FALSE)
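The plot suggests a weak relationship, and `cor()` puts a number on it. A minimal sketch that reuses the same `evals_ch5` selection; compare the magnitude of the result with the Star Wars height and mass correlation of about 0.76.

```r
library(dplyr)
library(moderndive)

evals_ch5 <- evals %>%
  select(ID, score, bty_avg, age)

# Pearson correlation between teaching score and instructor age
score_age_cor <- evals_ch5 %>%
  summarise(cor_score_age = cor(score, age))

score_age_cor
```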

Linear model

For every increase of 1 unit in `bty_avg`, there is an associated increase of, on average, 0.067 units of `score`, as interpreted in ModernDive (Kim, Ismay, and Kuhn 2021).
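To see the slope interpretation concretely, here is a sketch that predicts `score` at two `bty_avg` values exactly one unit apart; the difference between the two predictions equals the fitted slope. The values 4 and 5 are arbitrary choices for illustration.

```r
library(dplyr)
library(moderndive)

evals_ch5 <- evals %>%
  select(ID, score, bty_avg, age)

score_model <- lm(score ~ bty_avg, data = evals_ch5)

# Predicted score at two beauty ratings one unit apart
new_profs <- data.frame(bty_avg = c(4, 5))
preds <- predict(score_model, newdata = new_profs)

# The gap between the predictions is the bty_avg coefficient
diff(preds)
coef(score_model)[["bty_avg"]]
```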

```r
# Fit regression model:
score_model <- lm(score ~ bty_avg, data = evals_ch5)
score_model
```

```
Call:
lm(formula = score ~ bty_avg, data = evals_ch5)

Coefficients:
(Intercept)      bty_avg
    3.88034      0.06664
```

```r
summary(score_model)
```

```
Call:
lm(formula = score ~ bty_avg, data = evals_ch5)

Residuals:
    Min      1Q  Median      3Q     Max
-1.9246 -0.3690  0.1420  0.3977  0.9309

Coefficients:
            Estimate Std. Error t value Pr(>|t|)
(Intercept)  3.88034    0.07614   50.96  < 2e-16 ***
bty_avg      0.06664    0.01629    4.09 5.08e-05 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.5348 on 461 degrees of freedom
Multiple R-squared:  0.03502,  Adjusted R-squared:  0.03293
F-statistic: 16.73 on 1 and 461 DF,  p-value: 5.083e-05
```

Broom

The {broom} package converts the output of many common models into tidy data frames. For a more holistic approach to tidy modeling, see the {tidymodels} framework.

Use `broom::tidy()` to turn the model's coefficient table into a data frame; you can then wrangle it with dplyr functions.

```r
broom::tidy(score_model)
```

| term `<chr>` | estimate `<dbl>` | std.error `<dbl>` | statistic `<dbl>` | p.value `<dbl>` |
|---|---|---|---|---|
| (Intercept) | 3.88033795 | 0.07614297 | 50.961212 | 1.561043e-191 |
| bty_avg | 0.06663704 | 0.01629115 | 4.090382 | 5.082731e-05 |
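Because `tidy()` returns an ordinary data frame, dplyr verbs apply directly. A sketch, assuming you want only the slope together with a 95% confidence interval; `conf.int = TRUE` is an argument of broom's `tidy()` method for `lm` objects.

```r
library(dplyr)
library(moderndive)
library(broom)

evals_ch5 <- evals %>%
  select(ID, score, bty_avg, age)
score_model <- lm(score ~ bty_avg, data = evals_ch5)

# Keep only the slope row and its confidence interval
slope_row <- broom::tidy(score_model, conf.int = TRUE) %>%
  filter(term == "bty_avg") %>%
  select(term, estimate, conf.low, conf.high, p.value)

slope_row
```

An interval that excludes zero agrees with the small p-value reported for `bty_avg`.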

Use `broom::glance()` to get model-level fit statistics, such as R-squared, into a one-row data frame.

```r
broom::glance(score_model)
```

| r.squared `<dbl>` | adj.r.squared `<dbl>` | sigma `<dbl>` | statistic `<dbl>` | p.value `<dbl>` | df `<dbl>` |
|---|---|---|---|---|---|
| 0.03502226 | 0.03292903 | 0.5348351 | 16.73123 | 5.082731e-05 | 1 |
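For a simple linear regression with a single predictor, R-squared equals the square of the correlation between the outcome and the predictor. A sketch that checks this identity against `glance()`:

```r
library(dplyr)
library(moderndive)
library(broom)

evals_ch5 <- evals %>%
  select(ID, score, bty_avg, age)
score_model <- lm(score ~ bty_avg, data = evals_ch5)

fit_stats <- broom::glance(score_model)

# Correlation between outcome and the single predictor
r <- cor(evals_ch5$score, evals_ch5$bty_avg)

c(r_squared = fit_stats$r.squared, cor_squared = r^2)
```

This identity holds only with one predictor; with several predictors, R-squared is instead the squared correlation between the observed and fitted values.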

More model data

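Use `broom::augment()` to attach observation-level results to the data: predicted scores are in the `.fitted` column and residuals (observed minus predicted) are in `.resid`.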

```r
broom::augment(score_model)
```

| score `<dbl>` | bty_avg `<dbl>` | .fitted `<dbl>` | .resid `<dbl>` | .hat `<dbl>` | .sigma `<dbl>` |
|---|---|---|---|---|---|
| 4.7 | 5.000 | 4.213523 | 0.486476860 | 0.002474270 | 0.5349343 |
| 4.1 | 5.000 | 4.213523 | -0.113523140 | 0.002474270 | 0.5353899 |
| 3.9 | 5.000 | 4.213523 | -0.313523140 | 0.002474270 | 0.5352160 |
| 4.8 | 5.000 | 4.213523 | 0.586476860 | 0.002474270 | 0.5347157 |
| 4.6 | 3.000 | 4.080249 | 0.519750934 | 0.004025008 | 0.5348652 |
| 4.3 | 3.000 | 4.080249 | 0.219750934 | 0.004025008 | 0.5353177 |
| 2.8 | 3.000 | 4.080249 | -1.280249066 | 0.004025008 | 0.5320648 |
| 4.1 | 3.333 | 4.102439 | -0.002439199 | 0.003251767 | 0.5354161 |
| 3.4 | 3.333 | 4.102439 | -0.702439199 | 0.003251767 | 0.5344102 |
| 4.5 | 3.167 | 4.091377 | 0.408622549 | 0.003611506 | 0.5350758 |
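The `.fitted` and `.resid` columns follow directly from the coefficients: each fitted value is intercept + slope * `bty_avg`, and each residual is the observed score minus the fitted value. A sketch that recomputes both by hand (the column names `fitted_by_hand` and `resid_by_hand` are illustrative) and compares them with `augment()`:

```r
library(dplyr)
library(moderndive)
library(broom)

evals_ch5 <- evals %>%
  select(ID, score, bty_avg, age)
score_model <- lm(score ~ bty_avg, data = evals_ch5)

b <- coef(score_model)

checked <- broom::augment(score_model) %>%
  mutate(
    # Fitted value from the regression equation
    fitted_by_hand = b[["(Intercept)"]] + b[["bty_avg"]] * bty_avg,
    # Residual = observed minus fitted
    resid_by_hand = score - fitted_by_hand
  )

checked %>%
  select(score, bty_avg, .fitted, fitted_by_hand, .resid, resid_by_hand) %>%
  head()
```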

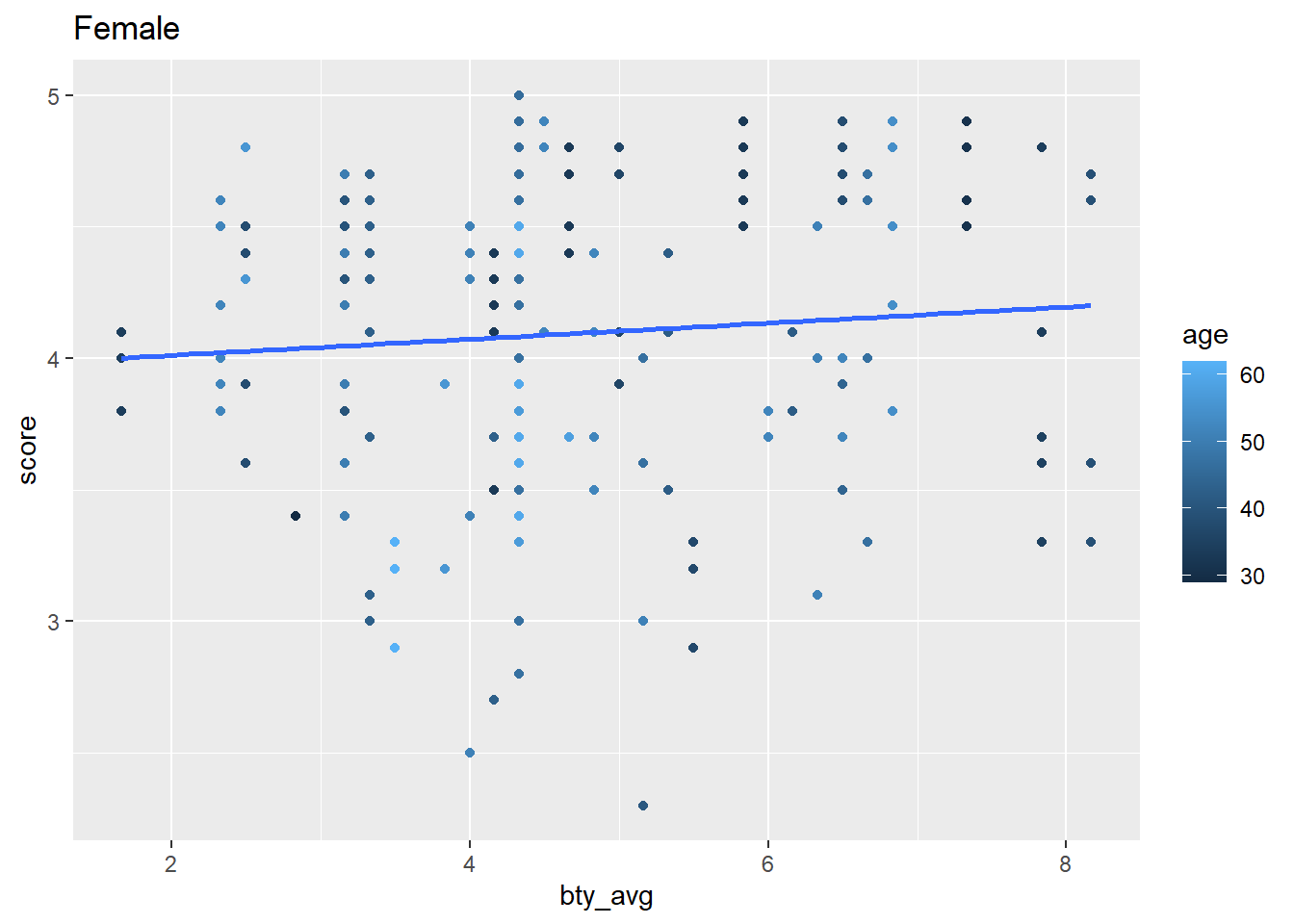

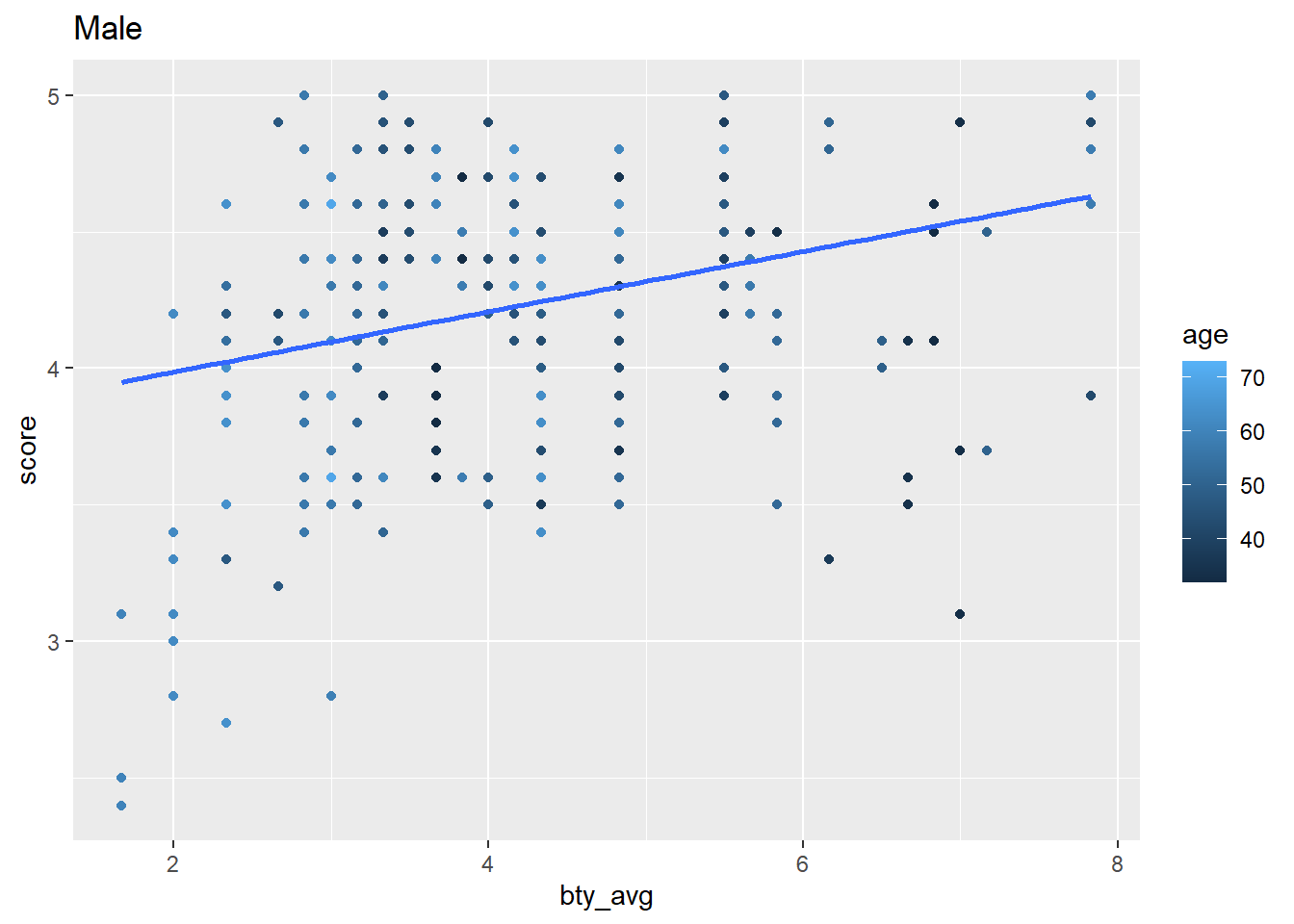

Example of iterative modeling with nested categories of data

In this next example we nest the data by the gender category, then use the {purrr} package to map anonymous functions over each nested data frame. Look closely and you'll see correlations, linear regression models, and visualizations, each iterated over the gender category. `purrr::map()` iteration methods go beyond what we've learned so far, but notice how tidy-data and tidyverse principles are leveraged in data wrangling and analysis. In future lessons we'll learn how to employ these techniques along with writing custom functions.

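```r
library(tidyverse)

my_iterations <- evals |>
  # Standardize column names (e.g., ID becomes id); requires {janitor}
  janitor::clean_names() |>
  # One row per gender; all other columns are nested into `data`
  nest(data = -gender) |>
  # Correlation of score with age and with beauty rating, per gender
  mutate(cor_age = map_dbl(data, \(data) cor(data$score, data$age))) |>
  mutate(cor_bty = map_dbl(data, \(data) cor(data$score, data$bty_avg))) |>
  # Fit score ~ bty_avg per gender and tidy the coefficients
  mutate(my_fit_bty = map(data, \(data) lm(score ~ bty_avg, data = data) |>
    broom::tidy())) |>
  # Scatter plot with a fitted regression line, per gender
  mutate(my_plot = map(data, \(data) ggplot(data, aes(bty_avg, score)) +
    geom_point(aes(color = age)) +
    geom_smooth(method = lm,
                se = FALSE,
                formula = y ~ x))) |>
  # Title each plot with its gender
  mutate(my_plot = map2(my_plot, gender, \(my_plot, gender) my_plot +
    labs(title = str_to_title(gender))))
```

This generates a data frame with list columns such as `my_fit_bty` and `my_plot`.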

```r
my_iterations
```

| gender `<fct>` | data `<list>` | cor_age `<dbl>` | cor_bty `<dbl>` | my_fit_bty `<list>` | my_plot `<list>` |
|---|---|---|---|---|---|
| female | `<tibble[,13]>` | -0.26517575 | 0.08547868 | `<tibble[,5]>` | `<S3: gg>` |
| male | `<tibble[,13]>` | -0.07645422 | 0.31093697 | `<tibble[,5]>` | `<S3: gg>` |
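When each group only needs a single number, such as a correlation, nesting is not strictly required. A sketch that cross-checks `cor_age` and `cor_bty` with `group_by()` and `summarise()`; it skips `janitor::clean_names()` because `score`, `age`, and `bty_avg` are already lower-case in `evals`.

```r
library(dplyr)
library(moderndive)

# One row per gender, same correlations as the nested version
gender_cors <- evals %>%
  group_by(gender) %>%
  summarise(
    cor_age = cor(score, age),
    cor_bty = cor(score, bty_avg)
  )

gender_cors
```

Nesting pays off once each group needs a richer object, such as a fitted model or a plot, which `summarise()` cannot hold as an ordinary column.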

`my_iterations$my_fit_bty` is a list column of tibbles (tidy data frames). We can unnest those data frames.

```r
my_iterations |>
  unnest(my_fit_bty)
```

| gender `<fct>` | data `<list>` | cor_age `<dbl>` | cor_bty `<dbl>` | term `<chr>` |
|---|---|---|---|---|
| female | `<tibble[,13]>` | -0.26517575 | 0.08547868 | (Intercept) |
| female | `<tibble[,13]>` | -0.26517575 | 0.08547868 | bty_avg |
| male | `<tibble[,13]>` | -0.07645422 | 0.31093697 | (Intercept) |
| male | `<tibble[,13]>` | -0.07645422 | 0.31093697 | bty_avg |
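Once unnested, the model results are ordinary tidy data, so the slope for each gender can be isolated with dplyr. A sketch that rebuilds only the model column so it runs on its own; it omits `janitor::clean_names()`, which does not change the `score`, `bty_avg`, or `gender` names.

```r
library(dplyr)
library(tidyr)
library(purrr)
library(moderndive)
library(broom)

slopes_by_gender <- evals |>
  nest(data = -gender) |>
  # Fit score ~ bty_avg per gender and tidy the coefficients
  mutate(my_fit_bty = map(data, \(data) broom::tidy(lm(score ~ bty_avg, data = data)))) |>
  select(gender, my_fit_bty) |>
  unnest(my_fit_bty) |>
  # Keep only the slope rows
  filter(term == "bty_avg") |>
  select(gender, estimate, std.error, p.value)

slopes_by_gender
```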

my_iterations$my_plot is a list column consisting of ggplot2 objects. We can pull those out of the data frame.

```r
my_iterations |>
  pull(my_plot)
```

Next steps

The example above shows how multiple models can be fitted by nesting data. Of course, modeling can be much more complex. A good next step is a basic introduction to tidymodels, where you'll find tips on integrating tidyverse principles with modeling, machine learning, feature selection, and tuning. Alternatively, build your iteration skills with the {purrr} package so you can combine iteration with custom functions.